How AI is making deepfakes better, harder to detect, and easier to make.

By Dennis Shelly

Technology and artificial intelligence (AI) are becoming increasingly capable of simulating, or faking, reality. For example, the modern film industry depends heavily on computer-generated sets, scenery, and actors in place of the practical locations, props, and people that were once common and necessary. These scenes are often indistinguishable from reality. Deepfake technology has recently drawn a lot of media attention because of its use for devious purposes. Deepfakes, one of the most recent advances in computer imagery, are made by training AI to swap one person’s appearance for another’s in a recorded video or image. According to a report released last year, over 85,000 harmful deepfake videos had been detected as of December 2021, with the number doubling every six months since observations started in December 2018.

What are deepfakes?

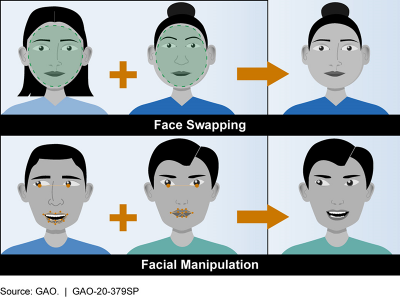

A deepfake is a media file (typically an image, video, or speech recording depicting a human subject) that has been deceptively altered using deep neural networks (DNNs) to change a person’s identity. Most often, this alteration takes the form of a “face swap,” in which the identity of a source subject is swapped onto a different subject. The target’s facial expressions and head movements stay the same, but the face that appears in the video is the source’s.

How AI and Machine Learning make deepfakes easier to make and harder to detect

There are several techniques for making deepfakes, but the most common combines deep neural networks called autoencoders with a face-swapping technique. You’ll need a target video to serve as the basis for the deepfake, plus a collection of video clips of the person you want to insert into it. An autoencoder is a deep learning model that studies those clips to learn what the person looks like from various angles and under different conditions, and then maps that person onto the individual in the target video by matching shared characteristics such as head position and facial expression.
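
A common design for autoencoder face swaps uses one shared encoder, trained on clips of both people, and a separate decoder for each person. The toy sketch that follows shows only that data flow: the hypothetical `Face` and `Latent` types and the `encode`/`make_decoder`/`swap_faces` functions stand in for neural networks and images, and nothing is actually learned. The point is the swap itself: encode a frame of the target, then decode it with the source person’s decoder.

```python
from dataclasses import dataclass
from typing import Callable, List


@dataclass(frozen=True)
class Face:
    """Toy stand-in for a face image: whose face it is, plus pose and expression."""
    identity: str
    yaw_degrees: float  # head turned left/right
    smile: float        # expression intensity, 0.0 to 1.0


@dataclass(frozen=True)
class Latent:
    """What the shared encoder keeps: pose and expression, but no identity."""
    yaw_degrees: float
    smile: float


def encode(face: Face) -> Latent:
    # Shared encoder: in a real tool, ONE network trained on both people's clips.
    return Latent(face.yaw_degrees, face.smile)


def make_decoder(identity: str) -> Callable[[Latent], Face]:
    # Per-person decoder: in a real tool, trained to rebuild only that person's face.
    def decode(latent: Latent) -> Face:
        return Face(identity, latent.yaw_degrees, latent.smile)
    return decode


def swap_faces(target_frames: List[Face], source_identity: str) -> List[Face]:
    # The swap: encode each TARGET frame, then decode with the SOURCE person's
    # decoder, so the source's face takes on the target's pose and expression.
    decode_source = make_decoder(source_identity)
    return [decode_source(encode(frame)) for frame in target_frames]


frames = [Face("target", 10.0, 0.2), Face("target", -5.0, 0.9)]
print(swap_faces(frames, "source"))
# [Face(identity='source', yaw_degrees=10.0, smile=0.2), Face(identity='source', yaw_degrees=-5.0, smile=0.9)]
```

In a real system the latent is a learned vector rather than two named numbers, which is why the result can carry over subtle expressions the designers never labeled.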

Generative adversarial networks (GANs), another type of machine learning, can also be incorporated into the process. A GAN pairs two networks: a generator that creates fakes and a discriminator that tries to tell them apart from real examples. Over many rounds, the generator learns to correct the imperfections the discriminator catches, which makes the finished deepfakes harder for detectors to spot. Because GANs learn from large amounts of data to create new examples that closely imitate the real thing, they are becoming a common method for creating deepfakes.
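
The adversarial loop can be illustrated with a deliberately tiny toy. In a GAN, a generator produces fakes and a discriminator learns to separate them from real data; the generator then adjusts itself to fool the discriminator. Here the "data" are single numbers rather than images: real samples cluster around 4.0, the hypothetical generator `G(z) = theta + z` shifts random noise by one learnable number, and the discriminator is a one-feature logistic regression. All names, distributions, and learning rates are assumptions chosen for illustration; real deepfake GANs use deep networks on images.

```python
import math
import random


def sigmoid(t: float) -> float:
    """Numerically stable logistic function."""
    if t >= 0:
        return 1.0 / (1.0 + math.exp(-t))
    e = math.exp(t)
    return e / (1.0 + e)


def train_discriminator(real, fake, steps=100, lr=0.5, l2=0.01):
    """Logistic regression D(x) = sigmoid(w * (x - center) + b): real -> 1, fake -> 0."""
    center = (sum(real) + sum(fake)) / (len(real) + len(fake))
    data = [(x - center, 1.0) for x in real] + [(x - center, 0.0) for x in fake]
    w = b = 0.0
    for _ in range(steps):
        grad_w = grad_b = 0.0
        for u, label in data:
            err = sigmoid(w * u + b) - label  # derivative of the log loss
            grad_w += err * u
            grad_b += err
        w -= lr * (grad_w / len(data) + l2 * w)  # small L2 penalty keeps w bounded
        b -= lr * grad_b / len(data)
    return w, b, center


def train_toy_gan(rounds=40, n=100, g_lr=0.2, seed=0):
    """Return (mean of the final fakes, mean of the real data)."""
    rng = random.Random(seed)
    real = [rng.gauss(4.0, 0.5) for _ in range(n)]   # stand-in for real faces
    noise = [rng.gauss(0.0, 0.5) for _ in range(n)]  # fixed generator input
    theta = 0.0  # generator G(z) = theta + z starts far from the real data
    for _ in range(rounds):
        fake = [theta + z for z in noise]
        # Discriminator round: learn to tell real from fake.
        w, b, center = train_discriminator(real, fake)
        # Generator round: non-saturating loss -log D(G(z)),
        # whose derivative with respect to theta is -(1 - D(G(z))) * w.
        grad = sum(-(1.0 - sigmoid(w * (x - center) + b)) * w for x in fake) / n
        theta -= g_lr * grad
    fake = [theta + z for z in noise]
    return sum(fake) / n, sum(real) / n


fake_mean, real_mean = train_toy_gan()
print(f"fake mean {fake_mean:.3f}, real mean {real_mean:.3f}")
```

Retraining the discriminator from scratch each round keeps this toy stable and easy to follow; practical GANs usually alternate small gradient steps on both networks, which is harder to keep stable.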

The Chinese app Zao, DeepFaceLab, FaceApp (a photo editing app with built-in AI techniques), Face Swap, and DeepNude (a particularly harmful app that produced fake nude images of women) all make creating deepfakes simple, even for beginners. More sophisticated web applications, such as Deepfakes Web, Familiar, Impressions, and Alethea.AI, let users create deepfake videos online.

Some AI experts are worried about the risks posed by deepfake videos and the easily accessible technology used to make them, which raises new questions about whether social media companies are ready to control the spread of digital fakery. Disinformation watchdogs are also preparing for a surge of deepfakes that may mislead viewers or make it harder to tell what is real on the internet.

How Deepfakes can be detected

As deepfakes become more prevalent, society will most likely have to adapt to spotting deepfake videos, just as internet users have adapted to spotting other types of fake news. In many cases, as in cybercrime, new deepfake techniques must first surface before they can be identified and stopped from spreading, which can set off an endless cycle of new fakes and new countermeasures and possibly cause even more damage.

Deepfakes can be identified by a few indicators:

- Some deepfakes struggle to animate facial features accurately, which leads to videos where the subject either doesn’t blink at all or blinks too often or unnaturally. However, after researchers at the University at Albany published a study identifying this blinking anomaly, newer deepfakes were released that no longer have the problem.

- Look for problems with skin tone or hair, as well as facial features that appear blurrier than the surroundings they sit in. The focus may look unnaturally soft.

- Deepfake algorithms often retain the lighting from the source clips used to build the fake, which can be a poor fit for the lighting in the target video.

- The audio may not match the person on screen, particularly if the video was faked but the audio track was not manipulated as carefully.
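
Two of these indicators lend themselves to simple automated checks. The following sketch assumes that eye landmarks and grayscale crops of the face and its background have already been extracted by some other tool (such as a facial-landmark detector), which is not shown. It counts blinks from a per-frame eye aspect ratio (EAR), a common measure of how open an eye is, and compares the sharpness of the face with its surroundings using the variance of a Laplacian filter. The function names and every threshold (0.2 for a closed eye, 5 to 40 blinks per minute, a 0.5 sharpness ratio) are hypothetical illustrations, not validated values.

```python
import math


def eye_aspect_ratio(eye):
    """eye: six (x, y) landmarks; eye[0] and eye[3] are the corners."""
    p1, p2, p3, p4, p5, p6 = eye
    vertical = math.dist(p2, p6) + math.dist(p3, p5)
    horizontal = math.dist(p1, p4)
    return vertical / (2.0 * horizontal)  # drops toward 0 as the eye closes


def count_blinks(ear_per_frame, closed_below=0.2, min_frames=2):
    """Count runs of at least `min_frames` consecutive closed-eye frames."""
    blinks = run = 0
    for ear in ear_per_frame:
        if ear < closed_below:
            run += 1
        else:
            if run >= min_frames:
                blinks += 1
            run = 0
    if run >= min_frames:  # video ended mid-blink
        blinks += 1
    return blinks


def blink_rate_is_suspicious(ear_per_frame, fps, low=5.0, high=40.0):
    # Hypothetical bounds: flag videos that blink far too rarely or too often.
    minutes = len(ear_per_frame) / fps / 60.0
    rate = count_blinks(ear_per_frame) / minutes
    return rate < low or rate > high


def laplacian_variance(gray):
    """Sharpness of a 2-D list of grayscale values (at least 3x3); lower is blurrier."""
    values = [
        gray[r - 1][c] + gray[r + 1][c] + gray[r][c - 1] + gray[r][c + 1] - 4 * gray[r][c]
        for r in range(1, len(gray) - 1)
        for c in range(1, len(gray[0]) - 1)
    ]
    mean = sum(values) / len(values)
    return sum((v - mean) ** 2 for v in values) / len(values)


def face_is_blurrier(face_crop, background_crop, ratio=0.5):
    # Flag when the face is much less sharp than the scene around it.
    return laplacian_variance(face_crop) < ratio * laplacian_variance(background_crop)
```

As the blinking study shows, any fixed heuristic like this can be defeated once deepfake makers learn about it, so these checks are at best one signal among many.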

To Conclude

The competition between making and detecting deepfakes is not going away anytime soon. Deepfakes will become simpler to create, more realistic, and extremely difficult to detect. Current shortcomings, such as synthesized faces that lack fine detail, are likely to be solved by incorporating GAN models. With improvements in hardware and lighter-weight neural network designs, training and generation times will decrease. In the past few years, we have seen new algorithms that deliver a much higher degree of realism or run in near real time. Deepfake videos are evolving beyond simple face swapping to include whole-head synthesis (head puppetry), combined audiovisual synthesis, and even whole-body synthesis.

Have more questions about AI, Deepfakes, or protecting yourself from these new types of technologies and threats? We can help! Our Eggsperts are eggcellent in the newest technologies and are standing by. Please contact us by visiting our website at www.eggheadit.com, by calling (760) 205-0105, or by emailing us at tech@eggheadit.com with your questions, or suggestions for our next article.

IT | Networks | Security | Voice | Data